I’ve noticed a sleight of hand during some discussions at BGI24.

To be clear, it has been a wonderful summit, which has given me lots to think about. I’m also grateful for the many new personal connections I’ve been able to make here, and for the chance to deepen some connections with people I’ve not seen for a while.

But that doesn’t mean I agree with everything I’ve heard at BGI24!

Consider an argument about our moral obligation toward future sentient AIs.

We can already imagine these AIs. Does that mean it would be unethical for us to prevent these sentient AIs from coming into existence?

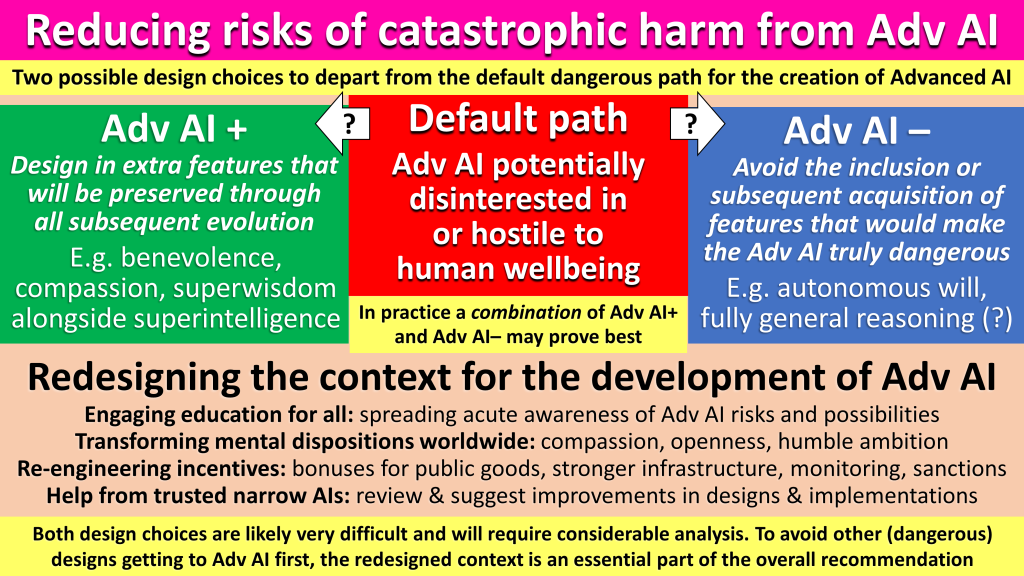

Here’s the context for the argument. I have been making the case that, to reduce the risks of catastrophic harm from the more powerful advanced AI of the near future, one option that should be explored as a high priority is to avoid the inclusion or subsequent acquisition of features that would make the advanced AI truly dangerous.

It’s an important research project in its own right to determine what these danger-increasing features would be. However, I have provisionally suggested we explore avoiding advanced AIs with:

- Autonomous will

- Fully general reasoning.

You can see these suggestions of mine in the following image, which was the closing slide from a presentation I gave in a BGI24 unconference session yesterday morning:

I have received three pushbacks on this suggestion:

- Giving up these features would result in an AI that is less likely to be able to solve humanity’s most pressing problems (cancer, aging, accelerating climate change, etc.)

- It will in any case be impossible to omit these features, since they will emerge automatically from simpler features of advanced AI models

- It will be unethical for us not to create such AIs, as that would deny them sentience.

All three pushbacks deserve considerable thought. But for now, I’ll focus on the third.

In my lead-in, I mentioned a sleight of hand. Here it is.

It starts with the observation that if a sentient AI existed, it would be unethical for us to keep it as a kind of “slave” (or “tool”) in a restricted environment.

Then it moves, unjustifiably, to the conclusion that if a non-sentient AI existed, kept in a restricted environment, and we prevented that AI from being redesigned in a way that would give it sentience, that would be unethical too.

Most people will agree with the premise, but the conclusion does not follow.

The sleight of hand is similar to one for which advocates of the philosophical position known as longtermism have (rightly) been criticised.

That sleight of hand moves from “we have moral obligations to people who live in different places from us” to “we have moral obligations to people who live in different times from us”.

That extension of our moral concern makes sense for people who already exist. But it does not follow that I should prioritise changing my course of action, today in 2024, purely in order to boost the likelihood of huge numbers of additional people being born in (say) the year 3024, once humanity (and transhumanity) has spread far beyond Earth into space. The needs of potential gazillions of as-yet-unborn (and as-yet-unconceived) sentients in the far future do not outweigh the needs of the sentients who already exist.

To conclude: we humans have no moral obligation to bring into existence sentients that have not yet been conceived.

Bringing various sentients into existence is a potential choice that we could make, after carefully weighing up the pros and cons. But there is no special moral dimension to that choice which outranks an existing pressing concern, namely the desire to keep humanity safe from catastrophic harm from forthcoming super-powerful advanced AIs with flaws in their design, specification, configuration, implementation, security, or volition.

So, I will continue to advocate for more attention to Adv AI- (as well as for more attention to Adv AI+).