For many years, the terms “AGI” and “ASI” have done sterling work in helping to shape constructive discussions about the future of AI.

(They are acronyms for “Artificial General Intelligence” and “Artificial Superintelligence”.)

But I think it’s now time, if not to retire these terms, then at least to side-line them.

In their place, we need some new concepts. Tentatively, I offer PCAI, SEMTAI, and PHUAI:

(pronounced, respectively, “pea sigh”, “sem tie”, and “foo eye” – so that they all rhyme with each other and, also, with “AGI” and “ASI”)

- Potentially Catastrophic AI

- Science, Engineering, and Medicine Transforming AI

- Potentially Humanity-Usurping AI.

Rather than asking ourselves “when will AGI be created?” and “what will AGI do?” and “how long between AGI and ASI?”, it’s better to ask what I will call the essential questions about the future of AI:

- “When is PCAI likely to be created?” and “How could we stop these potentially catastrophic AI systems from being actually catastrophic?”

- “When is SEMTAI likely to be created?” and “How can we accelerate the advent of SEMTAI without also accelerating the advent of dangerous versions of PCAI or PHUAI?”

- “When is PHUAI likely to be created?” and “How could we stop such an AI from actually usurping humanity and leaving us in a very unhappy state?”

The future that most of us can agree is profoundly desirable, I think, is one in which SEMTAI exists and is working wonders, transforming the disciplines of science, engineering, and medicine, so that we can all more quickly gain benefits such as:

- Improved, reliable, low-cost treatments for cancer, dementia, aging, etc

- Improved, reliable, low-cost abundant green energy – such as from controlled nuclear fusion

- Nanotech repair engines that can undo damage, not just in our human bodies, but in the wider environment

- Methods to successfully revive patients who have been placed into low-temperature cryopreservation.

If we can gain these benefits without the AI systems being “fully general” or “all-round superintelligent” or “independently autonomous, with desires and goals of its own”, then so much the better.

(Such systems might also be described as “limited superintelligence” – to refer to part of a discussion that took place at Conway Hall earlier this week – involving Connor Leahy (off screen in that part of the video, speaking from the audience), Roman Yampolskiy, and myself.)

Of course, existing AI systems have already transformed some important aspects of science, engineering, and medicine – witness the likes of AlphaFold from DeepMind. But I would reserve the term SEMTAI for more powerful systems that can produce the kinds of results listed in the four bullet points above.

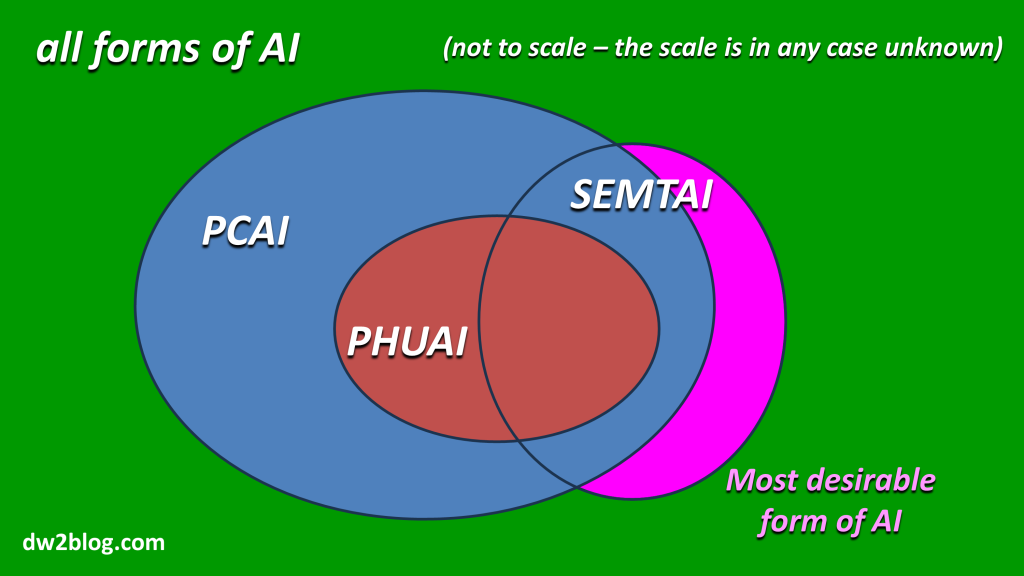

If SEMTAI is what is desired, what we most need to beware of are PCAI – potentially catastrophic AI – and PHUAI – potentially humanity-usurping AI:

- PCAI is AI powerful enough to play a central role in the rapid deaths of, say, upward of 100 million people

- PHUAI is AI powerful enough that it could evade human attempts to constrain it, and could take charge of the future of the planet, having little ongoing regard for the formerly prominent status of humanity.

PHUAI is a special case of PCAI, but PCAI involves a wider set of systems:

- Systems that could cause catastrophe as the result of wilful abuse by bad actors (of which, alas, the world has far too many)

- Systems that could cause catastrophe as a side-effect of a mistake made by a “good actor” in a hurry, taking decisions out of their depth, failing to foresee all the ramifications of their choices, pushing out products ahead of adequate testing, etc

- Systems that could change the employment and social media scenes so quickly that terribly bad political decisions are taken as a result – with catastrophic consequences.

Talking about PCAI, SEMTAI, and PHUAI side-steps many of the conversational black holes that stymie productive discussions about the future of AI. From now on, when someone asks me a question about AGI or ASI, I will seek to turn the attention to one or more of these three new terms.

After all, the new terms are defined by the consequences (actual or potential) that would flow from these systems, not by assessments of their internal states. It will therefore be easier to set aside questions such as:

- “How cognitively complete are these AI systems?”

- “Do these systems truly understand what they’re talking about?”

- “Are the emotions displayed by these systems just fake emotions or real emotions?”

These questions are philosophically interesting, but it is the “essential questions” that I offered above which urgently demand good answers.

Footnote: just in case some time-waster says all the above definitions are meaningless since AI doesn’t exist and isn’t a well-defined term, I’ll answer by referencing this practical definition from the open survey “Anticipating AI in 2030” (a survey to which you are all welcome to supply your own answers):

A non-biological system can be called an AI if it, by some means or other,

- Can observe data and make predictions about future observations

- Can determine which interventions might change outcomes in particular directions

- Has some awareness of areas of uncertainty in its knowledge, and can devise experiments to reduce that uncertainty

- Can learn from instances when outcomes did not match expectations, thereby improving future performance.

It might be said that LLMs (Large Language Models) fall short of some aspects of this definition. But combinations of LLMs and other computational systems do fit the bill.
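For readers who prefer to think in code, the four criteria in the definition above can be read as an interface that a system either does or does not satisfy. What follows is only a rough sketch under illustrative assumptions: the names `MeetsSurveyDefinition` and `RunningMeanAgent`, the method signatures, and the toy averaging logic are all hypothetical and do not come from the survey. The toy system also falls short of the third criterion, since it reports a crude uncertainty figure but does not devise experiments to reduce it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol, runtime_checkable


@runtime_checkable
class MeetsSurveyDefinition(Protocol):
    """Hypothetical interface: one method per criterion in the definition."""

    def predict(self) -> float: ...
    def choose_intervention(self, target: float, options: Dict[str, float]) -> str: ...
    def uncertainty(self) -> float: ...
    def learn(self, observed: float) -> None: ...


@dataclass
class RunningMeanAgent:
    """Toy system (an assumption for illustration): predicts the mean of past data."""

    observations: List[float] = field(default_factory=list)

    def predict(self) -> float:
        # Criterion 1: use observed data to predict the next observation.
        if not self.observations:
            return 0.0
        return sum(self.observations) / len(self.observations)

    def choose_intervention(self, target: float, options: Dict[str, float]) -> str:
        # Criterion 2: pick the intervention whose assumed effect moves the
        # prediction closest to the desired target.
        base = self.predict()
        return min(options, key=lambda name: abs(base + options[name] - target))

    def uncertainty(self) -> float:
        # Criterion 3 (partial): a crude uncertainty score that shrinks as
        # more data arrives. No experiments are devised here.
        return 1.0 / (1 + len(self.observations))

    def learn(self, observed: float) -> None:
        # Criterion 4: fold each new outcome into future predictions.
        self.observations.append(observed)


agent = RunningMeanAgent()
agent.learn(2.0)
agent.learn(4.0)
print(isinstance(agent, MeetsSurveyDefinition))
print(agent.predict())
print(agent.choose_intervention(5.0, {"raise": 2.0, "lower": -2.0}))
```

The `isinstance` check only confirms that the four methods exist, not that they do anything useful. That matches the spirit of the definition, which is about what a system can do “by some means or other” rather than about how it works inside.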

Image credit: The robots in the above illustration were generated by Midjourney. The illustration is, of course, not intended to imply that the actual AIs will be embodied in robots with such an appearance. But the picture hints at the likelihood that the various types of AI will have a great deal in common, and won’t be easy to distinguish from each other. (That’s the feature of AI which is sometimes called “multipurpose”.)

Brilliant article David, bravo! I will shift my terminology to the ones you outlined here. I’m SO tired of the never-ending debate about whether AGI is possible or not that inevitably pops up any time these topics are discussed. You have singlehandedly done away with that, and I say thank you!

Comment by Perpetual Mystic — 12 October 2023 @ 8:43 pm

Many thanks for the warm feedback, Perpetual Mystic! I appreciate it.

Comment by David Wood — 13 October 2023 @ 3:03 am

[…] soon as the phrase ‘AGI’ is mentioned, unhelpful philosophical debates break out. That’s why I have been suggesting new terms, such as PCAI, SEMTAI, and […]

Pingback by Transcendent questions on the future of AI: New starting points for breaking the logjam of AI tribal thinking - Mindplex — 18 December 2023 @ 4:16 pm