How likely is it that longevity escape velocity (LEV) will be achieved by, say, 2040?

In other words, how likely is it that, by 2040, biomedical interventions will be widely available that result in each adult becoming (if they wish) biologically younger – becoming systematically healthier and more resilient?

In that scenario, to give one illustration, adults who are aged 65 in 2050 will generally be healthier than they were at the age of 50 some 15 years earlier. They’ll be mentally sharper, with stronger muscles, a better immune system, cleaner arteries, and so on. That’s instead of them following the downward health spiral which has accompanied human existence throughout all of history so far – a spiral in which each additional year of life from middle age onward brings a decline in vitality and robustness, and an increase in the probability of death.

Members of the extended longevity community express a variety of degrees of optimism or pessimism on such questions. The pessimists highlight what they see as a lack of significant progress over recent decades: not a single person has reached the age of 120 this century. They also lament the apparently unfathomable complexity of biological metabolism, and differences of opinion over theories of what actually causes aging. They may conclude that the chance of reaching LEV by 2040 is less than one percent.

In contrast to that pessimism, I believe there are strong grounds for optimism. That’s the subject of this essay.

To be clear, there’s no inevitabilism to my optimism. I offer a probability for success, rather than any certainty. Whether humanity makes it to LEV by 2040 remains to be seen.

Theories of aging

It’s true that aging is complicated. However, we don’t need to understand all aspects of aging in order to reverse it. Nor do we need to map out a comprehensive diagram of all the relationships of cause and effect at the biochemical level. Nor to pinpoint all the interactions of every gene in every cell of the body. Nor to debate whether aging happens because of evolution or despite evolution. Nor whether aging is best understood from a “reductionist” perspective or a “holistic” perspective.

Instead, to my mind, we already understand enough. There are plenty of details still to be filled in, but we already understand the basic framework that can lead to the comprehensive reversal of aging.

I’m referring to the damage repair approach to ending aging. This approach views aging as the accumulation of damage at the cellular and biomolecular levels throughout our bodies, with that damage in turn reducing the vitality of bodily subsystems. Moreover, this approach maintains that our biological vitality can be restored by repeatedly intervening to remove or repair that damage before it reaches a critical level.

What needs to be researched, therefore, is the set of interventions that can be developed and applied to remove or repair biological damage, without having adverse side-effects on overall metabolism.

These interventions need to be understood at an engineering level rather than at a detailed scientific level. We need to ascertain that such-and-such interventions result in given observable reductions in cellular or biomolecular damage. The way in which damage accumulates before being removed or repaired is of secondary concern.
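To make this engineering framing concrete, here is a deliberately simplified toy model, not taken from any published study. It assumes that damage accumulates at a constant rate each year and that a hypothetical periodic intervention removes a fixed fraction of it. All names and numbers (`simulate_damage`, `accumulation_rate`, `repair_interval`, `repair_fraction`, `threshold`) are illustrative assumptions. The point is only qualitative: if repair is frequent and thorough enough, damage never reaches the critical level, even though the model says nothing about how the damage arose.

```python
from typing import Optional


def simulate_damage(
    years: int,
    accumulation_rate: float,
    repair_interval: int,
    repair_fraction: float,
    threshold: float,
) -> Optional[int]:
    """Toy model of the damage repair approach (illustrative assumptions only).

    Each year, damage grows by `accumulation_rate`. Every `repair_interval`
    years (0 means never), a hypothetical intervention removes
    `repair_fraction` of the accumulated damage. Returns the first year in
    which damage reaches `threshold`, or None if it never does.
    """
    damage = 0.0
    for year in range(1, years + 1):
        damage += accumulation_rate  # damage accrues, whatever its cause
        if repair_interval and year % repair_interval == 0:
            damage *= 1.0 - repair_fraction  # periodic removal or repair
        if damage >= threshold:
            return year
    return None


if __name__ == "__main__":
    # Hypothetical scenarios: (repair interval in years, fraction removed)
    scenarios = {
        "no repair": (0, 0.0),
        "weak repair every 10 years": (10, 0.1),
        "strong repair every 10 years": (10, 0.8),
    }
    for label, (interval, fraction) in scenarios.items():
        result = simulate_damage(100, 1.0, interval, fraction, 50.0)
        outcome = f"year {result}" if result is not None else "never (within 100 years)"
        print(f"{label}: critical level reached in {outcome}")
```

With these made-up numbers, damage reaches the threshold at year 50 without repair; weak repair delays that point but does not prevent it; strong repair keeps damage well below the threshold for the whole simulated century. Real biology is, of course, far messier than a single damage variable.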

This approach involves categorizing different types of damage, where each type of damage is associated with one or more potential mechanisms that could repair or remove it. Examples include:

- A decline in the number or health of stem cells available – which could be addressed by the introduction of new stem cells

- An accumulation of cells that are in a senescent state – which could be addressed by bolstering the innate biological mechanisms that normally break down these senescent cells

- Damage to the long-lived proteins in the extracellular matrix that normally supports cells, with results such as the stiffening of arteries – which could be addressed by a variety of mechanisms including breaking crosslinks between adjacent proteins.

On this view, what needs to be done to accelerate the advent of LEV is to:

- Identify and research mechanisms that have the potential to remove or repair aspects of the damage

- Determine how these mechanisms might be applied in practice

- Consider and monitor for potential side-effects of these mechanisms, and, as required, design modifications or alternatives to them

- Consider and monitor for potential interactions between various such mechanisms.

This program was first suggested over twenty years ago. It was the subject of a major book published in 2007 by Aubrey de Grey and Michael Rae, Ending Aging: The Rejuvenation Breakthroughs that Could Reverse Human Aging in Our Lifetime, and it has been explored in a series of academic conferences held at various times from 2003 onward at Queens’ College, Cambridge, and in San Francisco, Berlin, and Dublin. (Since you ask, the next one in that series is taking place in Dublin from 13–16 June.)

My own optimism that LEV might be achieved by 2040 is based on my assessment of the viability of this damage repair approach. In turn, that’s because I see:

- A wide set of potential damage repair interventions that deserve further study

- Early encouraging signs that damage repair can extend healthy lifespans in various species

- A general pattern that slow progress in a field can transition into a new phase with much faster progress

- Ways in which “breakthrough initiatives” can trigger such a phase transition for the project to achieve LEV.

I’ll now turn to each of these four points in sequence.

Damage repair mechanisms – plenty to explore

There are five basic sources of ideas for mechanisms to repair or remove damage at the cellular and biomolecular levels throughout the body:

- Identifying and improving the repair mechanisms that already work within the human body when we are younger – before these mechanisms lose their effectiveness

- Learning from the special self-repairing features of the small proportion of humans who are “superagers” in the sense that they reach the age of 95 without having suffered any of the usual age-related diseases such as heart disease, cancer, dementia, or stroke

- Learning from the fascinating self-repairing features of numerous species which avoid various age-related diseases, and which can retain their vitality for decades longer than other species with which they share many other characteristics

- Learning from other regenerative features that various species possess, such as the regrowth of damaged limbs or organs, as well as the birth of a baby, whose cells are effectively aged zero, to parents who may be many decades older

- Devising new interventions that don’t exist anywhere in nature, but which can be introduced as a result of scientific analysis and engineering innovation (relatively simple examples are blood transfusions, and stents that can repair a narrowed or blocked blood vessel; more complicated examples involve nanobots and 3D printing).

Progress so far

Here are some pointers to descriptions of various results obtained so far from investigations of possible damage repair interventions – disappointments as well as successes:

- The seven links included in the table in “A Reimagined Research Strategy for Aging” at the SENS Research Foundation

- The trials listed in “The Rejuvenation Roadmap” maintained by Lifespan.io

- The comprehensive survey of interventions catalogued at the “RAID – Rodent Aging Interventions Database” maintained by the LEV Foundation

- The AgingDB database of pharmacological trials targeting aging

- A recent review in Nature, “The long and winding road of reprogramming-induced rejuvenation”

- The TRIIM trials to rejuvenate the human thymus.

Some conclusions from this data are uncontentious:

- None of these treatments, so far, have resulted in animals passing the LEV threshold

- The extension of healthy lifespan achieved in these trials is rarely more than 50%, and is usually significantly less than that

- Results obtained in experiments with shorter-lived animals, such as mice and rats, often do not translate into similar results with humans (or have not done so yet).

These conclusions would appear to bolster the case for pessimism mentioned earlier. However, they are by no means the entire story:

- The various trials indicate that at least some rejuvenation can be engineered, and that there are multiple ways of doing so

- Trials of combinations of different rejuvenation treatments (which might be expected to have more substantial results) are still at an early stage

- Nothing like a proof of impossibility has been found, or even seriously suggested

- The total amount of resources dedicated to this field is far below that in many other fields of scientific research; the field might, plausibly, be expected to make faster improvements if it gains more support.

Key to faster progress will be the removal of roadblocks. That’s the subject of the remainder of this essay.

The possibility of a phase change

Sometimes a field of technology or other endeavour remains relatively stagnant for decades, apparently making little progress, before bursting forward in a major new spurt of progress. Factors that can trigger such a tipping point, and the phase change that follows, include:

- The availability of re-usable tools (such as improved microscopes, molecular assembly techniques, diagnostic tests, or reliable biomarkers of aging)

- The availability of important new sets of data (such as population-scale genomic analyses)

- The maturity of complementary technologies (such as a network of electrical recharging stations, to allow the wide adoption of electric vehicles; or a network of wireless towers, to allow the wide adoption of wireless phones)

- Vindication of particular theoretical ideas (such as the paramount importance of mechanisms of balance, in the earliest powered airplanes; or the germ theory for the transmission of infectious diseases)

- Results that demonstrate possibilities which previously seemed beyond feasibility (such as the first time someone ran a mile in under four minutes)

- Fear regarding a new competitive threat (such as the USSR launching Sputnik, which led to sweeping changes in how public funding was allocated in the United States)

- Fear regarding an impending disaster (such as the spread of Covid-19, which accelerated development of vaccines for coronaviruses)

- The availability of significant financial prizes (such as those provided by the XPrize)

- A change in the attitude of researchers about the attractiveness of working in the field

- A change in the public narrative regarding the importance of the field

- The various groups who are trying to solve problems in the field finding, and committing to, a productive new method of collaboration on what turn out to be the core issues.

When such factors apply – especially in combination – they can transform the pace of change in a field from “linear” or “incremental” to “exponential” or “disruptive”, meaning that progress which previously was forecast as requiring (say) 100 years of research might actually happen within (say) 15 years.
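The “100 years versus 15 years” contrast can be checked with back-of-the-envelope arithmetic. Suppose, purely as an illustrative assumption, that a field needs a fixed amount of research effort (100 hypothetical “work units”) and currently progresses at 1 unit per year. At a constant rate, completion takes 100 years. If instead the rate doubles every d years, the cumulative work after t years is W(t) = r0 (e^(kt) - 1) / k with k = ln 2 / d, and solving W(t) = 100 for t gives the time needed. The function name and all parameter values below are hypothetical.

```python
import math
from typing import Optional


def years_to_complete(
    work_units: float, initial_rate: float, doubling_time: Optional[float] = None
) -> float:
    """Years needed to finish `work_units` of research (hypothetical units).

    With no doubling_time, progress is linear at `initial_rate` per year.
    Otherwise the rate grows exponentially, doubling every `doubling_time`
    years, so cumulative work is W(t) = r0 * (exp(k*t) - 1) / k with
    k = ln(2) / doubling_time. Solving W(t) = work_units for t gives the result.
    """
    if doubling_time is None:
        return work_units / initial_rate
    k = math.log(2) / doubling_time
    return math.log(1 + work_units * k / initial_rate) / k


if __name__ == "__main__":
    print(f"linear: {years_to_complete(100, 1.0):.1f} years")
    for d in (2.0, 3.0, 5.0):
        t = years_to_complete(100, 1.0, d)
        print(f"rate doubling every {d:.0f} years: {t:.1f} years")
```

Under these assumptions, a rate that doubles every three years completes the 100-unit program in just under 14 years, while a five-year doubling time stretches that to roughly 19 years; the outcome is quite sensitive to the assumed doubling time.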

That’s a general pattern. Now let’s consider how it can apply to accelerating progress toward LEV.

The existing roadblocks

Based on my observations of the longevity community stretching back nearly twenty years, here is my own assessment of the roadblocks which are presently hindering progress toward LEV:

- Lack of funding for some of the experiments that would produce important new data, since commercial interests such as VCs see little prospect of earning a financial return from funding them.

- Some people who are in a position to supply funding to support important experiments choose not to do so, because they are dominated by a mindset (sometimes called “longevity myths” or the “pro-aging trance”) that it’s wrong to support significantly longer lifespans.

- More broadly: society as a whole assigns insufficient priority to the comprehensive prevention and reversal of age-related diseases.

- Some potential supporters are deterred by what they perceive as irresponsible or untrustworthy aspects of the longevity field (snake-oil solutions, uncritical claims, tedious infighting).

- There is disagreement or confusion about which experiments are most important; as a result, available funds are being misdirected into, for example, less useful “lifestyle research”.

- Related: there is no agreed list of which experiments (or other research) should be conducted next, once additional funds become available.

- In the absence of biomarkers that are accepted as good measurements of overall biological aging (as opposed to measuring only one aspect of it), it’s hard to know whether a treatment increases the life expectancy of a long-lived animal without waiting for that animal’s whole lifespan to play out.

- It’s likely that pools of data already in existence contain important insights related to aging and its possible alleviation – namely biological and other health data about individuals as they age and pass through different experiences and treatments. However, much of this data is kept in proprietary or private databases and isn’t made available for scrutiny by other researchers. Given the power of modern data analysis tools such as deep learning, this means that a significant potential for open analysis is going unrealized. (This is another example where commercial or personal concerns are preventing the development of public goods from which everyone would benefit.)

Given this analysis, let’s look at four initiatives that could coalesce to cause the kind of phase transition discussed above.

The breakthrough initiatives

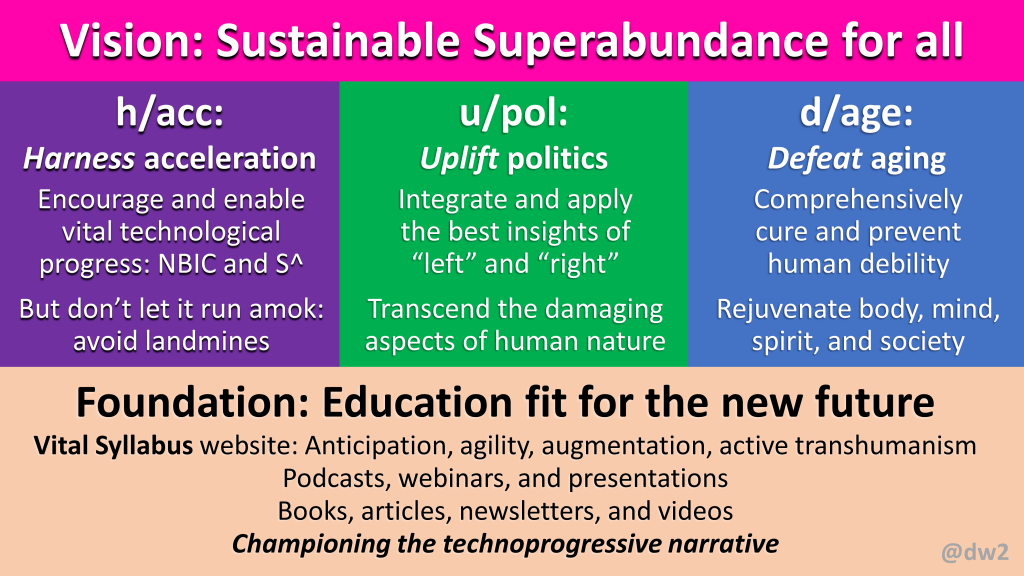

From one perspective, the breakthrough initiatives involve biomedical reengineering: projects such as the RMR (Robust Mouse Rejuvenation) study of combination interventions, designed and managed by the LEV Foundation. These are projects which have the potential to make the whole world wake up and pay attention.

But from another perspective, what most needs to change is the availability and application of sufficient funding to allow many such biomedical engineering projects to proceed in parallel. This can be termed the rejuvenation financial reengineering initiative – the initiative to direct more of the world’s vast financial resources toward these projects.

Taking one step further back, the financial reengineering will be facilitated by perhaps the most important initiative of all – namely narrative reengineering, altering the kinds of stories people in society tell themselves about the desirability of the comprehensive prevention and reversal of age-related diseases. Today, many people have an underlying sense that aging and death are deeply regrettable, yet they persuade themselves (and each other) that nothing can be done about them, so that the appropriate response is to “accept what cannot be changed”. That is, they lack “the courage to change what can be changed” because, to complete the quotation from the so-called “serenity prayer” of Reinhold Niebuhr, they lack the wisdom (or awareness) to see that such change is possible.

In parallel, important elements of community reengineering are required:

- To clarify which experiments and research have the biggest potential for dramatic results

- To avoid behaviours or statements which alienate or deter important potential supporters

- To reduce wasteful duplication and “noise”

- To develop and publicise meaningful quantitative metrics of progress toward LEV

- To share more openly both the successes and the failures of experiments conducted, to allow more effective collaborative learning.

The breakthrough narratives

Some observers are pessimistic about any changes any time soon in the public narrative about the desirability of reaching LEV. These observers say they have been awaiting such a change for years or even decades, without it happening.

Part of the answer is that experimental results will make people pay attention. When middle-aged mice have their remaining life expectancy doubled – and then when similar treatments become available for middle-aged pet dogs – it is going to cause a large number of “road to Damascus” conversion experiences. People will set aside their former proclaimed “acceptance” of aging and death, and will instead start to clamor for rejuvenation treatments to be made available for humans too, as soon as possible.

But another part of the answer is to develop new themes within the public conversation related to aging and death. If these new themes have sufficient innate interest, they may develop a momentum of their own.

Here are some of the potential “breakthrough narratives” that I have in mind:

- Building on top of the latest “longevity dividend” and “evergreen society” arguments in the new book by the economist Andrew Scott, The Longevity Imperative: How to Build a Healthier and More Productive Society to Support Our Longer Lives, to highlight the broader economic and social benefits of biorejuvenation treatments

- The fascinating lessons to be learned from looking more closely at the damage repair mechanisms already utilized by some “superaging” animal species; more and more of these mechanisms are being discovered and explored, and deserve greater publicity.

- Additional lessons from further study of human superagers. Note that, for evolutionary reasons, it is likely that different superaging families around the world employ different biological damage repair mechanisms.

- The RMR narrative that the particularly useful data to collect is that from the combination of multiple treatments administered in mid-life; as this data accumulates, it is likely to give rise to lots of new theories about interactions between these treatments.

- The attractiveness of extending the RMR projects (for the robust rejuvenation of middle-aged mice) to similar investigations that might be called RDR (focused on dogs) and RSR (focused on simians, that is, monkeys and apes).

- The ups and downs of the various teams that are entering the XPrize Healthspan – a contest that can be seen as promoting an “RHR” extension (the “H” standing for “human”) to the RMR / RDR / RSR progression mentioned above

- A new analysis to supersede the existing “hallmarks of aging” diagrams, with a richer model of the interactions between different types of aging damage and the different possible damage repair mechanisms.

- Exploration of some “left field” rejuvenation interventions, such as those of Jean Hébert about growing and using replacement organs (including gradual replacement of parts of our brains), and those of Michael Levin about the ways in which the electrome can trigger biorejuvenation.

- The new possibilities that are continuing to emerge that take advantage of CRISPR-style gene editing and the reprogramming of epigenetics by Yamanaka factors or other means.

- The potential for more powerful AI platforms to enable faster advances in fields of science than were previously expected; examples include AlphaFold by DeepMind and the so-called menagerie of AI models utilised by Insilico Medicine

- Further championing of the ideas of anti-death philosopher Ingemar Patrick Linden from his book The Case Against Death.

- Engaging new video versions of some of the above narratives, in the manner of the CGP Grey video of Nick Bostrom’s allegory “The Fable of the Dragon-Tyrant” and those in the “Aging” YouTube playlist of Andrew Steele.

A probability, not a certainty

As I said earlier, there’s nothing inevitable about the longevity community experiencing the kind of tipping point and phase transition that I have described above.

Instead, I estimate the probability of humanity reaching LEV by 2040 to be less than 50%, although more than 25%. That’s because there are plenty of things that can go wrong along the way:

- Distractions and loss of focus

- Too much infighting and lack of constructive collaboration

- A decline in the understanding and use of scientific methods

- The field becomes dominated by pseudoscience, uncritical hero-worship, snake-oil, or wishful thinking

- A growth of societal irrationality and preference for conspiracy thinking

- An adverse change in the global political and geopolitical environment

- The triggering of one or more of what I have called “Landmines”.

Which set of forces will prevail – the ones highlighted by the optimists, or those highlighted by the pessimists?

Frankly, it’s still too early to tell. But each of us can and should help to influence the outcome, by finding the roles where we can make the biggest positive impact.