“Humanity has never faced a greater problem than itself.”

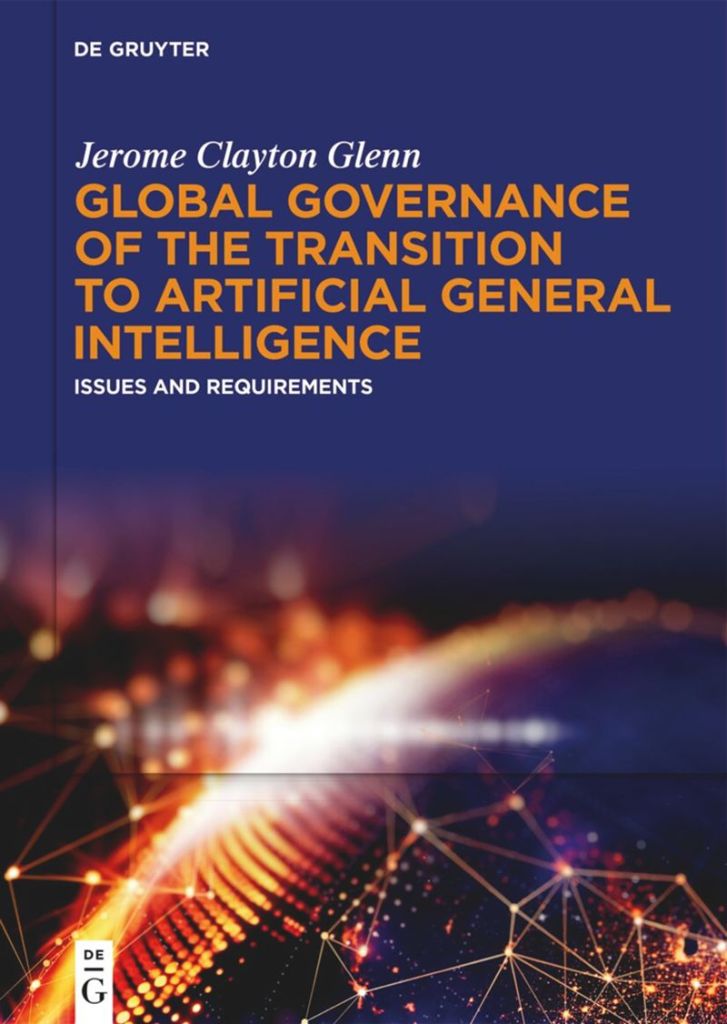

That phrase was what my brain hallucinated while I was browsing the opening section of the Introduction of the groundbreaking new book Global Governance of the Transition to Artificial General Intelligence, written by my friend and colleague Jerome C. Glenn, Executive Director of The Millennium Project.

I thought to myself: That’s a bold but accurate way of summing up the enormous challenge faced by humanity over the next few years.

In previous centuries, our biggest problems have often come from the environment around us: deadly pathogens, devastating earthquakes, torrential storms, plagues of locusts – as well as marauding hordes of invaders from outside our local neighbourhood.

But in the second half of the 2020s, our problems are being compounded as never before by our own human inadequacies:

- We’re too quick to rush to judgement, seeing only parts of the bigger picture

- We’re too loyal to the tribes to which we perceive ourselves as belonging

- We’re overconfident in our ability to know what’s happening

- We’re too comfortable with manufacturing and spreading untruths and distortions

- We’re too bound into incentive systems that prioritise short-term rewards

- We’re too fatalistic, as regards the possible scenarios ahead.

You may ask, What’s new?

What’s new is the combination of these deep flaws in human nature with technology that is remarkably powerful yet opaque and intractable. AI that is increasingly beyond our understanding and beyond our control is being coupled in potentially devastating ways with our over-hasty, over-tribal, over-confident thoughts and actions. New AI systems are being rushed into deployment and used in attempts:

- To manufacture and spread truly insidious narratives

- To incentivize people around the world to act against their own best interests, and

- To resign people to inaction when in fact it is still within their power to alter and uplift the trajectory of human destiny.

In case this sounds like a counsel of despair, I should clarify at once my appreciation of aspects of human nature that are truly wonderful, as counters to the negative characteristics that I have already mentioned:

- Our thoughtfulness, that can counter rushes to judgement

- Our collaborative spirit, that can transcend partisanship

- Our wisdom, that can recognise our areas of lack of knowledge or lack of certainty

- Our admiration for truth, integrity, and accountability, that can counter ends-justify-the-means expediency

- Our foresight, that can counter short-termism and free us from locked-in inertia

- Our creativity, to imagine and then create better futures.

Just as AI can magnify the regrettable aspects of human nature, so also it can, if used well, magnify those commendable aspects.

So, which is it to be?

The fundamental importance of governance

The question I’ve just asked isn’t a question that can be answered by individuals alone. Any one group – whether an organisation, a corporation, or a decentralised partnership – can have its own beneficial actions overtaken and capsized by catastrophic outcomes of groups that failed to heed the better angels of their nature, and which, instead, allowed themselves to be governed by wishful naivety, careless bravado, pangs of jealousy, hostile alienation, assertive egotism, or the madness of the crowd.

That’s why the message of this new book by Jerome Glenn is so timely: the processes of developing and deploying increasingly capable AIs need to be:

- Governed, rather than happening chaotically

- Globally coordinated, rather than there being no cohesion between the different governance processes applicable in different localities

- Progressed urgently, without being shut out of mind by all the shorter-term issues that, understandably, also demand governance attention.

Before giving more of my own thoughts about this book, let me share some of the commendations it has received:

- “This book is an eye-opening study of the transition to a completely new chapter of history.” – Csaba Kőrösi, 77th President of the UN General Assembly

- “A comprehensive overview, drawing both on leading academic and industry thinkers worldwide, and valuable perspectives from within the OECD, United Nations.” – Jaan Tallinn, founding engineer, Skype and Kazaa; co-founder, Cambridge Centre for the Study of Existential Risk and the Future of Life Institute

- “Written in lucid and accessible language, this book is a must read for people who care about the governance and policy of AGI.” – Lan Xue, Chair of the Chinese National Expert Committee on AI Governance.

The book also carries an absorbing foreword by Ben Goertzel. In this foreword, Ben introduces himself as follows:

Since the 1980s, I have been immersed in the field of AI, working to unravel the complexities of intelligence and to build systems capable of emulating it. My journey has included introducing and popularizing the concept of AGI, developing innovative AGI software frameworks such as OpenCog, and leading efforts to decentralize AI development through initiatives like SingularityNET and the ASI Alliance. This work has been driven by an understanding that AGI is not just an engineering challenge but a profound societal pivot point – a moment requiring foresight, ethical grounding, and global collaboration.

He clarifies why the subject of the book is so important:

The potential benefits of AGI are vast: solutions to climate change, the eradication of diseases, the enrichment of human creativity, and the possibility of postscarcity economies. However, the risks are equally significant. AGI, wielded irresponsibly or emerging in a poorly aligned manner, could exacerbate inequalities, entrench authoritarianism, or unleash existential dangers. At this critical juncture, the questions of how AGI will be developed, governed, and integrated into society must be addressed with both urgency and care.

The need for a globally participatory approach to AGI governance cannot be overstated. AGI, by its nature, will be a force that transcends national borders, cultural paradigms, and economic systems. To ensure its benefits are distributed equitably and its risks mitigated effectively, the voices of diverse communities and stakeholders must be included in shaping its development. This is not merely a matter of fairness but a pragmatic necessity. A multiplicity of perspectives enriches our understanding of AGI’s implications and fosters the global trust needed to govern it responsibly.

He then offers wide praise for the contents of the book:

This is where the work of Jerome Glenn and The Millennium Project may well prove invaluable. For decades, The Millennium Project has been at the forefront of fostering participatory futures thinking, weaving together insights from experts across disciplines and geographies to address humanity’s most pressing challenges. In Governing the Transition to Artificial General Intelligence, this expertise is applied to one of the most consequential questions of our time. Through rigorous analysis, thoughtful exploration of governance models, and a commitment to inclusivity, this book provides a roadmap for navigating the complexities of AGI’s emergence.

What makes this work particularly compelling is its grounding in both pragmatism and idealism. It does not shy away from the technical and geopolitical hurdles of AGI governance, nor does it ignore the ethical imperatives of ensuring AGI serves the collective good. It recognizes that governing AGI is not a task for any single entity but a shared responsibility requiring cooperation among nations, corporations, civil society, and, indeed, future AGI systems themselves.

As we venture into this new era, this book reminds us that the transition to AGI is not solely about technology; it is about humanity, and about life, mind, and complexity in general. It is about how we choose to define intelligence, collaboration, and progress. It is about the frameworks we build now to ensure that the tools we create amplify the best of what it means to be human, and what it means to both retain and grow beyond what we are.

My own involvement

To fill in some background detail: I was pleased to be part of the team that developed the set of 22 critical questions which sat at the heart of the interviews and research which are summarised in Part I of the book – and I conducted a number of the resulting interviews. In parallel, I explored related ideas via two different online Transpolitica surveys:

- “Key open questions about the transition to AGI”, June-August 2023

- “Anticipating AI in 2030”, September-December 2023

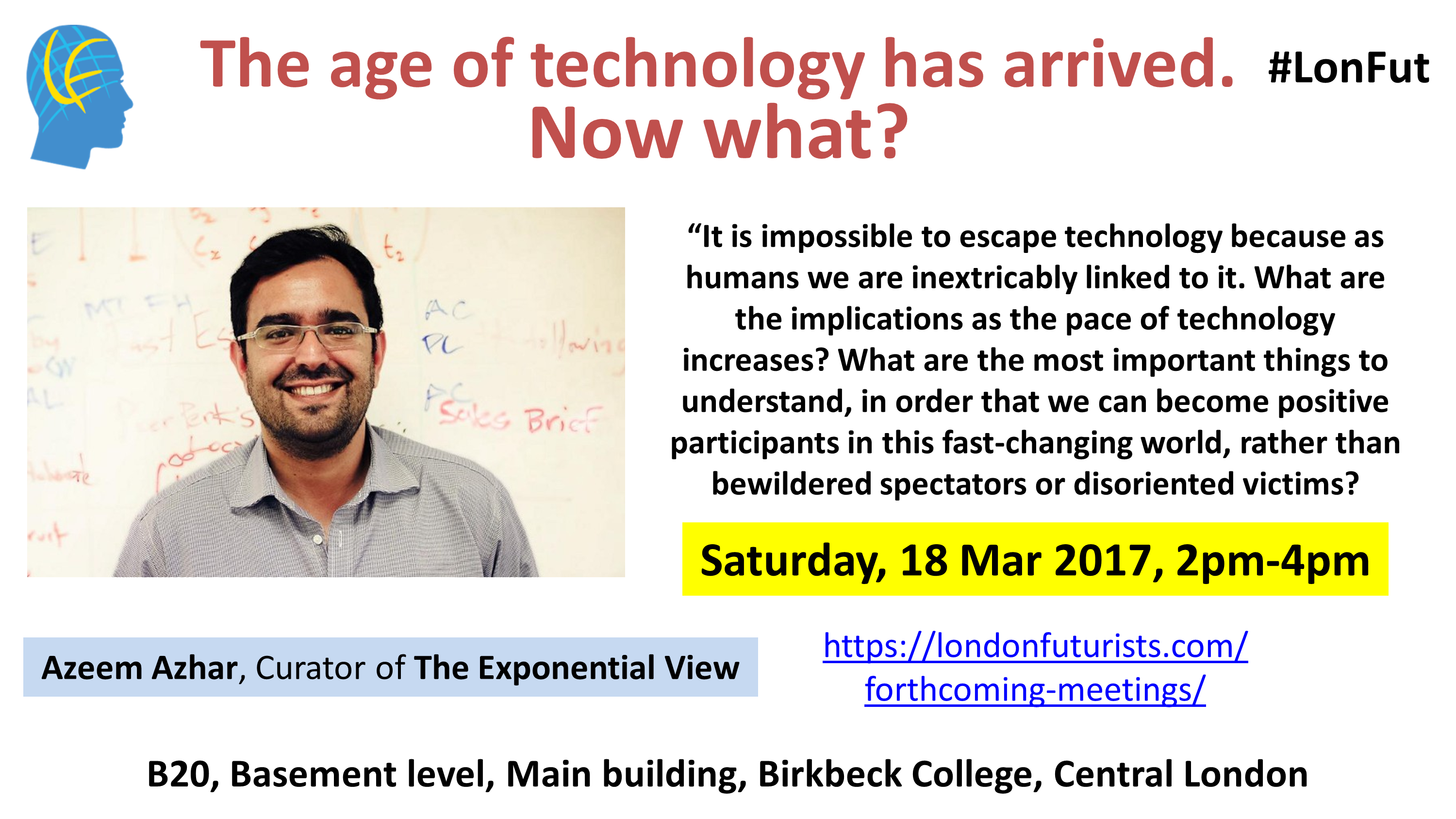

And I’ve been writing roughly one major article (or giving a public presentation) on similar topics every month since then. Recent examples include:

- “How to build BGIs instead of CGIs”

- “Preventing unsafe superintelligence: four choices”

- “Measuring what truly matters”

Over this time period, my views have evolved. I see the biggest priority, nowadays, not as figuring out how to govern AGI as it comes into existence, but rather, how to pause the development and deployment of any new types of AI that could spark the existence of self-improving AGI.

That global pause needs to last long enough that the global community can justifiably be highly confident that any AGI that will subsequently be built will be what I have called a BGI (a Beneficial General Intelligence) rather than a CGI (a Catastrophic General Intelligence).

Govern AGI and/or Pause the development of AGI?

I recently posted a diagram on various social media platforms to illustrate some of the thinking behind that stance of mine:

Alongside that diagram, I offered the following commentary:

The next time someone asks me what’s my p(Doom), compared with my p(SSfA) (the probability of Sustainable Superabundance for all), I may try to talk them through a diagram like this one. In particular, we need to break down the analysis into two cases – will the world keep rushing to build AGI, or will it pause from that rush.

To explain some points from the diagram:

We can reach the very desirable future of SSfA by making wise use of AI only modestly more capable than what we have today;

We might also get there as a side-effect of building AGI, but that’s very risky.

None of the probabilities are meant to be considered precise. They’re just ballpark estimates.

I estimate around 2/3 chance that the world will come to its senses and pause its current headlong rush toward building AGI.

But even in that case, risks of global catastrophe remain.

The date 2045 is also just a ballpark choice. Either of the “singularity” outcomes (wonderful or dreadful) could arrive a lot sooner than that.

The 1/12 probability I’ve calculated for “stat” (I use “stat” here as shorthand for a relatively unchanged status quo) by 2045 reflects my expectation of huge disruptions ahead, of one sort or another.

The overall conclusion: if we want SSfA, we’re much more likely to get it via the “pause AGI” branch than via the “headlong rush to AGI” branch.

And whilst doom is possible in either branch, it’s much more likely in the headlong rush branch.

For more discussion of how to get the best out of AI and other cataclysmically disruptive technologies, see my book The Singularity Principles (the entire contents are freely available online).

Feel free to post your own version of this diagram, with your own estimates of the various conditional probabilities.
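For anyone who wants to try that, here is a minimal sketch of the arithmetic behind such a diagram: each overall probability is the sum, over the two branches, of the branch probability multiplied by the conditional probability of the outcome within that branch. Only the 2/3 figure for a pause and the overall 1/12 for “stat” come from the commentary above. The conditional values in the code are hypothetical placeholders, not figures read from the diagram; they were picked only so that the total for “stat” matches 1/12. The function name `combine` and the dictionary layout are likewise assumptions made for illustration.

```python
from fractions import Fraction

OUTCOMES = ("SSfA", "doom", "stat")


def combine(p_pause, given_pause, given_rush):
    """Law of total probability over the two branches (pause vs. headlong rush)."""
    p_rush = 1 - p_pause
    # Within each branch, the conditional probabilities must sum to 1.
    for branch in (given_pause, given_rush):
        if sum(branch.values()) != 1:
            raise ValueError("conditional probabilities in a branch must sum to 1")
    return {
        outcome: p_pause * given_pause[outcome] + p_rush * given_rush[outcome]
        for outcome in OUTCOMES
    }


# 2/3 is the estimate quoted in the text for the world pausing.
p_pause = Fraction(2, 3)

# HYPOTHETICAL placeholder conditionals (not taken from the diagram).
given_pause = {"SSfA": Fraction(1, 2), "doom": Fraction(3, 8), "stat": Fraction(1, 8)}
given_rush = {"SSfA": Fraction(1, 4), "doom": Fraction(3, 4), "stat": Fraction(0)}

overall = combine(p_pause, given_pause, given_rush)
for outcome, probability in overall.items():
    print(f"p({outcome}) = {probability}")
```

With these placeholder inputs the totals come to 5/12 for SSfA, 1/2 for doom, and 1/12 for “stat”. Swapping in your own conditional estimates shows how sensitive the totals are to each branch.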

As indicated, I was hoping for feedback, and I was pleased to see a number of comments and questions in response.

One excellent question was this, by Bill Trowbridge:

What’s the difference between:

(a) better AI, and

(b) AGI

The line is hard to draw. So, we’ll likely just keep making better AI until it becomes AGI.

I offered this answer:

On first thought, it may seem hard to identify that distinction. But thankfully, we humans don’t just throw up our hands in resignation every time we encounter a hard problem.

For a good starting point on making the distinction, see the ideas in “A Narrow Path” by Control AI.

But what surprised me the most was the confidence expressed by various online commenters that:

- “A pause however desirable is unlikely: p(pause) = 0.01”

- “I am confident in saying this – pause is not an option. It is actually impossible.”

- “There are several organisations working on AI development and at least some of them are ungovernable [hence a pause can never be global]”.

There’s evidently a large gulf between the figure of 2/3 that I suggested for p(pause) and the views of these clearly intelligent respondents.

Why a pause isn’t that inconceivable

I’ll start my argument on this topic by confirming that I see this discussion as deeply important. Different viewpoints are welcome, provided they are held thoughtfully and offered honestly.

Next, although it’s true that some organisations may appear to be ungovernable, I don’t see any fundamental issue here. As I said online,

“Given sufficient public will and/or political will, no organisation is ungovernable.”

Witness the compliance by a number of powerful corporations in both China and the US with control measures declared by national governments.

Of course, smaller actors and decentralised labs pose enforcement challenges, but these labs are less likely to be able to marshal sufficient computing capabilities to be the first to reach breakthrough new levels of capability, especially if decentralised monitoring of dangerous attributes is established.

I’ve drawn attention on previous occasions to the parallel with the apparent headlong rush in the 1980s toward nuclear weapons systems that were ever more powerful and ever more dangerous. As I explained at some length in the “Geopolitics” chapter of my 2021 book Vital Foresight, it was an appreciation of the horrific risks of nuclear winter (first articulated in the 1980s) that helped to catalyse a profound change in attitude amongst the leadership camps in both the US and the USSR.

It’s the wide recognition of risk that can provide the opportunity for governments around the world to impose an effective pause in the headlong rush toward AGI. But that’s only one of five steps that I believe are needed:

- Awareness of catastrophic risks

- Awareness of bottlenecks

- Awareness of mechanisms for verification and control

- Awareness of profound benefits ahead

- Awareness of the utility of incremental progress

Here are more details about these five steps I envision:

- Clarify in an undeniable way how superintelligent AIs could pose catastrophic risks of human disaster within just a few decades or even within years – so that this topic receives urgent high-priority public attention

- Highlight bottlenecks and other locations within the AI production pipeline where constraints can more easily be applied (for example, distribution of large GPU chip clusters, and the few companies that are providing unique services in the creation of cutting-edge chips)

- Establish mechanisms that go beyond “trust” to “trust and verify”, including robust independent monitors and auditors, as well as tamperproof remote shut-down capabilities

- Indicate how the remarkable benefits anticipated for humanity from aspects of superintelligence can be secured, more safely and more reliably, by applying the governance mechanisms of the second and third points above, rather than just blindly trusting in a no-holds-barred race to be the first to create superintelligence

- Be prepared to start with simpler agreements, involving fewer signatories and fewer control points, and be ready to build up stronger governance processes and culture as public consensus and understanding moves forward.

Critics can assert that each of these five steps is implausible. In each case, there are some crunchy discussions to be had. What I find dangerous, however, isn’t when people disagree with my assessments on plausibility. It’s when they approach the questions with what seems to be:

- A closed mind

- A tribal loyalty to their perceived online buddies

- Overconfidence that they already know all relevant examples and facts in this space

- A willingness to distract or troll, or to offer arguments not in good faith

- A desire to protect their flow of income, rather than honestly review new ideas

- A resignation to the conclusion that humanity is impotent.

(For analysis of a writer who displays several of these tendencies, see my recent blogpost on the book More Everything Forever by Adam Becker.)

I’m not saying any of this will be easy! It’s probably going to be humanity’s hardest task over our long history.

As an illustration of points worthy of further discussion, I offer this table that highlights strengths and weaknesses of both the “governance” and “pause” approaches:

| Dimension | Governance (Continue AGI Development with Oversight) | Pause (Moratorium on AGI Development) |
| --- | --- | --- |
| Core Strategy | Implement global rules, standards, and monitoring while AGI is developed | Impose a temporary but enforceable pause on new AGI-capable systems until safety can be assured |
| Assumptions | Governance structures can keep pace with AI progress; Compliance can be verified | Public and political will can enforce a pause; Technical progress can be slowed |
| Benefits | Encourages innovation while managing risks; Allows early harnessing of AGI for societal benefit; Promotes global collaboration mechanisms | Buys time to improve safety research; Reduces risk of premature, unsafe AGI; Raises chance of achieving Beneficial General Intelligence (BGI) instead of CGI |
| Risks | Governance may be too slow, fragmented, or under-enforced; Race dynamics could undermine agreements; Possibility of catastrophic failure despite regulation | Hard to achieve global compliance; Incentives for “rogue” actors to defect, in the absence of compelling monitoring; Risk of stagnation or loss of trust in governance processes |
| Implementation Challenges | Requires international treaties; Robust verification and auditing mechanisms; Balancing national interests vs. global good | Defining what counts as “AGI-capable” research; Enforcing restrictions across borders and corporations; Maintaining pause momentum without indefinite paralysis |
| Historical Analogies | Nuclear Non-Proliferation Treaty (NPT); Montreal Protocol (ozone layer); Financial regulation frameworks | Nuclear test bans; Moratoria on human cloning research; Apollo program wind-down (pause in space race intensity) |
| Long-Term Outcomes (if successful) | Controlled and safer path to AGI; Possibility of Sustainable Superabundance but with higher risk of misalignment | Higher probability of reaching Sustainable Superabundance safely, but risks innovation slowdown or “black market” AGI |

In short, governance offers continuity and innovation but with heightened risks of misalignment, whereas a pause increases the chances of long-term safety but faces serious feasibility hurdles.

Perhaps the best way to loosen attitudes, to allow a healthier conversation on the above points and others arising, is exposure to a greater diversity of thoughtful analysis.

And that brings me back to Global Governance of the Transition to Artificial General Intelligence by Jerome Glenn.

A necessary focus

Jerome’s book bears his personal stamp throughout. His is a unique passion – that the particular risks and issues of AGI should not be swept into a side-discussion about the risks and issues of today’s AI. These latter discussions are deeply important too, but time and again, they result in existential questions about AGI being kicked down the road for months or even years. That’s something Jerome regularly challenges, rightly, and with vigour and intelligence.

Jerome’s presence is felt all over the book in one other way – he has painstakingly curated and augmented the insights of scores of different contributors and reviewers, including:

- Insights from 55 AGI experts and thought leaders across six major regions – the United States, China, the United Kingdom, Canada, the European Union, and Russia

- The online panel of 229 participants from the global community around The Millennium Project who logged into a Real Time Delphi study of potential solutions to AGI governance, and provided at least one answer

- Chairs and co-chairs of the 70 nodes of The Millennium Project worldwide, who provided additional feedback and opinion.

The book therefore includes many contradictory suggestions, but Jerome has woven these different threads of thoughts into a compelling unified tapestry.

The result is a book that carries the kind of pricing normally reserved for academic textbooks (as insisted by the publisher). My suggestion is that you recommend that your local library obtain a copy of what is a unique collection of ideas.

Finally, about my hallucination, mentioned at the start of this review. On double-checking, I realise that Jerome’s statement is actually, “Humanity has never faced a greater intelligence than itself.” The opening paragraph of that introduction continues,

Within a few years, most people reading these words will live with such superior artificial nonhuman intelligence for the rest of their lives. This book is intended to help us shape that intelligence or, more likely, those intelligences as they emerge.

Shaping the intelligence of the AI systems that are on the point of emerging is, indeed, a vital task.

And as Ben Goertzel says in his Foreword,

These are fantastic and unprecedented times, in which the impending technological singularity is no longer the province of visionaries and outsiders but almost the standard perspective of tech industry leaders. The dawn of transformative intelligence surpassing human capability – the rise of artificial general intelligence, systems capable of reasoning, learning, and innovating across domains in ways comparable to, or beyond, human capabilities – is now broadly accepted as a reasonably likely near-term eventuality, rather than a vague long-term potential.

The moral, social, and political implications of this are at least as striking as the technological ones. The choices we make now will define not only the future of technology but also the trajectory of our species and the broader biosphere.

To which I respond: whether we make these choices well or badly will depend on which aspects of humanity we allow to dominate our global conversation. Will humanity turn out to be its own worst enemy? Or its own best friend?

Postscript: Opportunity at the United Nations

Like it or loathe it, the United Nations still represents one of the world’s best venues where serious international discussion can, sometimes, take place on major issues and risks.

From 22nd to 30th September, the UNGA (United Nations General Assembly) will be holding what it calls its “high-level week”. This includes a multi-day “General Debate”, described as follows:

At the General Debate – the annual meeting of Heads of State and Government at the beginning of the General Assembly session – world leaders make statements outlining their positions and priorities in the context of complex and interconnected global challenges.

Ahead of this General Debate, the national delegates who will be speaking on behalf of their countries have the ability to recommend to the President of the UNGA that particular topics be named in advance as topics to be covered during the session. If the advisors to these delegates are attuned to the special issues of AGI safety, they should press their representative to call for that topic to be added to the schedule.

If this happens, all other countries will then be required to do their own research into that topic. That’s because each country will be expected to state its position on this issue, and no diplomat or politician wants to look uninformed. The speakers will therefore contact the relevant experts in their own country, and, ideally, will do at least some research of their own. Some countries might call for a pause in AGI development if it appears impossible to establish national licensing systems and international governance in sufficient time.

These leaders (and their advisors) would do well to read the report recently released by the UNCPGA entitled “Governance of the Transition to Artificial General Intelligence (AGI): Urgent Considerations for the UN General Assembly” – a report that I discussed in an article three months ago.

As I said at that time, anyone who reads that report carefully, and digs further into some of the excellent references it contains, ought to be jolted out of any sense of complacency. The sooner, the better.
